There is a quiet but important misunderstanding surrounding artificial intelligence today. AI is often spoken about as if it were a kind of digital sorcery: feed it data, and insight appears; deploy a model, and intelligence emerges. This framing is not just inaccurate; it is dangerous. It obscures the real source of value and leads to poor architectural decisions, misplaced expectations, and fragile systems.

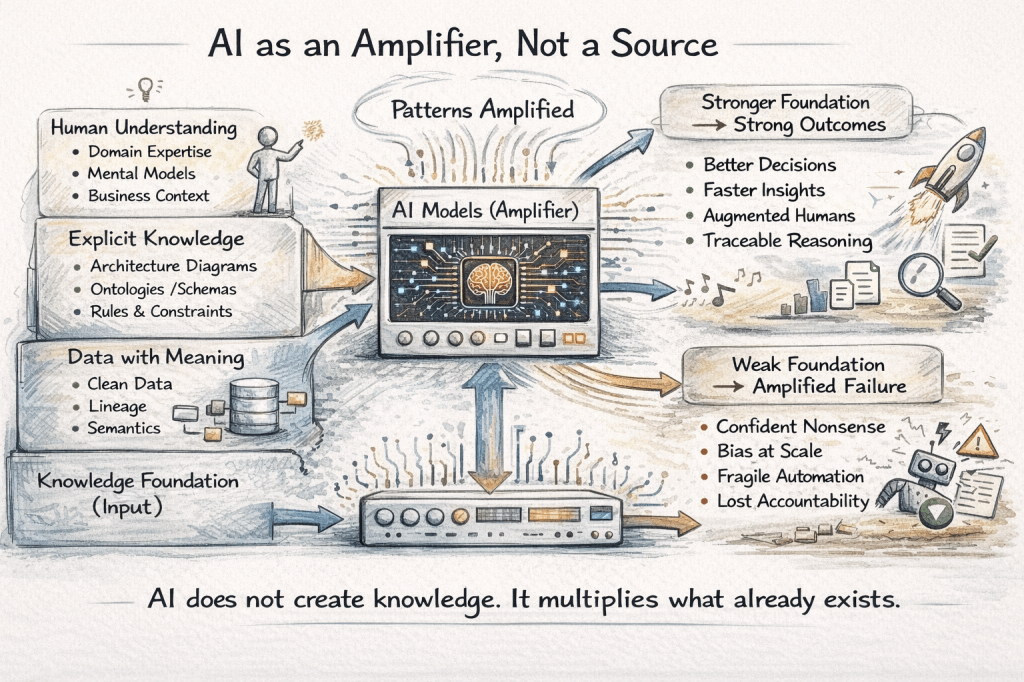

AI does not create knowledge out of nothing. It amplifies what already exists.

Understanding this distinction is essential for anyone building serious systems, making long-term technology decisions, or integrating AI into products that must survive real-world complexity.

The Illusion of Intelligence

Modern AI systems are impressive. Large language models write coherent text, vision models recognize patterns at scale, and recommendation engines predict behavior with uncanny accuracy. From the outside, this looks like intelligence.

But what we are seeing is structured pattern amplification, not understanding.

AI systems operate within boundaries defined by:

- The quality and structure of data they are trained on

- The assumptions embedded in model architectures

- The objectives and loss functions chosen by humans

- The constraints of deployment environments

When these foundations are weak, AI confidently amplifies the weakness.

This is why AI can be eloquent and wrong at the same time.

Knowledge Precedes Intelligence

Before AI can “reason,” something more basic must exist: knowledge representation.

Consider what happens inside a mature organization:

- Business rules exist in documents, code, and people’s heads

- Domain relationships are implicit, fragmented, and inconsistent

- Historical decisions are rarely formalized

- Context lives in emails, tickets, spreadsheets, and tribal memory

Introducing AI into this environment does not resolve ambiguity. It magnifies it.

If your organization does not understand its own systems, AI will not suddenly understand them on your behalf.

AI works best when:

- Concepts are defined clearly

- Relationships are explicit

- Data has lineage and semantics

- Decisions are traceable

In other words, AI amplifies clarity, not confusion.
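
To make "explicit relationships" and "lineage" concrete, here is a minimal sketch of what writing knowledge down might look like. Everything in it is hypothetical: the `Fact` type, the `related` helper, and the example entities and source names are illustrations, not part of any real system or library.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fact:
    """One explicit statement about the domain, plus where it came from."""
    subject: str
    relation: str
    obj: str
    source: str  # lineage: the (hypothetical) origin of this statement


# Illustrative facts only; the entity and source names are made up.
FACTS = [
    Fact("Invoice", "belongs_to", "Customer", source="billing_schema_v3"),
    Fact("Customer", "managed_by", "AccountTeam", source="crm_export_2024_01"),
]


def related(subject: str, facts: list[Fact]) -> list[tuple[str, str, str]]:
    """Return (relation, object, source) for every fact about `subject`."""
    return [(f.relation, f.obj, f.source) for f in facts if f.subject == subject]


print(related("Invoice", FACTS))
```

The point is not the data structure itself. Once a relationship is written down with its source, both people and AI components can look it up instead of guessing it.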

Amplification Works Both Ways

This is the uncomfortable truth:

AI amplifies good knowledge and bad assumptions equally well.

If your data reflects bias, AI scales bias.

If your metrics reward speed over correctness, AI optimizes recklessness.

If your architecture is brittle, AI accelerates failure.

This is why AI initiatives so often fail: not because the models are weak, but because the foundations are shallow.

Organizations often ask:

“Which model should we use?”

A better question is:

“What do we actually know, and how well do we know it?”

From Automation to Augmentation

The most successful AI systems are not built to replace thinking, but to extend it.

In practice, this means:

- AI surfaces patterns humans would miss

- Humans provide judgment where context matters

- Systems are designed for explanation, not mystique

- Decisions remain accountable

This is the difference between automation and augmentation.

AI as automation says:

“Let the system decide.”

AI as knowledge amplification says:

“Let the system show us what we couldn’t see—then decide better.”

Architecture Matters More Than Models

There is a tendency to treat AI as a layer you “add on top” of systems. This usually fails.

In durable architectures:

- Knowledge is modeled explicitly (schemas, ontologies, graphs)

- Data pipelines are observable and versioned

- Decisions can be traced back to inputs

- AI components are modular, replaceable, and auditable
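
As a rough illustration of the last two points, the sketch below records every decision together with its inputs and the versions that produced it, and treats the model as a plain function that can be swapped out. All names (`DecisionRecord`, `decide`, `simple_scorer`, the feature names, the version strings, and the 0.5 threshold) are assumptions made for this example.

```python
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Callable, Mapping

# Any function from features to a score can act as the model, so it is replaceable.
Scorer = Callable[[Mapping[str, float]], float]


@dataclass(frozen=True)
class DecisionRecord:
    """Everything needed to trace a decision back to its inputs."""
    inputs: Mapping[str, float]
    dataset_version: str
    model_name: str
    model_version: str
    score: float
    approved: bool
    decided_at: str


def decide(inputs: Mapping[str, float], scorer: Scorer, *, model_name: str,
           model_version: str, dataset_version: str,
           threshold: float = 0.5) -> DecisionRecord:
    """Score the inputs and return the decision together with its provenance."""
    score = scorer(inputs)
    return DecisionRecord(
        inputs=dict(inputs),  # copy so later changes do not alter the record
        dataset_version=dataset_version,
        model_name=model_name,
        model_version=model_version,
        score=score,
        approved=score >= threshold,
        decided_at=datetime.now(timezone.utc).isoformat(),
    )


def simple_scorer(inputs: Mapping[str, float]) -> float:
    """Stand-in for a real model: a weighted sum clipped to [0, 1]."""
    raw = 0.6 * inputs["payment_history"] + 0.4 * inputs["income_ratio"]
    return max(0.0, min(1.0, raw))


record = decide(
    {"payment_history": 0.9, "income_ratio": 0.5},
    simple_scorer,
    model_name="simple_scorer",
    model_version="0.1",
    dataset_version="2024-01",
)
print(record)
```

Replacing `simple_scorer` with a different model changes nothing about how decisions are recorded, which is roughly what "modular, replaceable, and auditable" asks for.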

In fragile architectures:

- AI models compensate for missing structure

- Business logic leaks into prompts

- Outputs are trusted without understanding

- Failures are discovered only after impact
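
One way to keep business logic from leaking into prompts is to decide in ordinary, testable code and let the model handle only the wording. The sketch below assumes a made-up refund policy; `Order`, `REFUND_WINDOW_DAYS`, `refund_eligible`, and `build_prompt` are hypothetical names, and no particular model API is implied.

```python
from dataclasses import dataclass

REFUND_WINDOW_DAYS = 30  # assumed policy value, for illustration only


@dataclass(frozen=True)
class Order:
    days_since_delivery: int
    opened: bool


def refund_eligible(order: Order) -> bool:
    """The business rule lives here, where it can be tested and versioned."""
    return order.days_since_delivery <= REFUND_WINDOW_DAYS and not order.opened


def build_prompt(order: Order) -> str:
    """The prompt only asks for wording; the decision is already made."""
    decision = "eligible" if refund_eligible(order) else "not eligible"
    return (
        "Write a short, polite message to a customer. "
        f"The refund decision is already made: the order is {decision}. "
        "Do not change or reinterpret the decision."
    )


print(build_prompt(Order(days_since_delivery=45, opened=False)))
```

If the policy changes, the change happens in `refund_eligible` and its tests, not in a paragraph of prompt text that nobody reviews.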

The difference is not tooling. It is philosophy.

The Human Still Matters

One of the most subtle risks of AI is the erosion of human understanding.

When systems appear to “just work,” teams stop asking:

- Why does this output make sense?

- What assumptions are being made?

- Where could this break?

- Who is responsible if it does?

Mature AI systems are designed to preserve human insight, not replace it.

The goal is not to remove humans from the loop, but to upgrade the loop.

AI as a Multiplier, Not a Mind

A useful mental model is this:

AI is a force multiplier for existing knowledge systems.

If the base is strong, the multiplier creates leverage.

If the base is weak, the multiplier accelerates collapse.

This helps explain why small, well-structured teams often outperform larger organizations with more data and more models. They understand their systems deeply enough for AI to be effective.

The Quiet Advantage

Organizations that treat AI as magic tend to chase novelty:

- New models

- Larger parameter counts

- Louder demos

Organizations that treat AI as a knowledge amplifier invest in:

- Conceptual clarity

- Data semantics

- Decision traceability

- Architectural integrity

The second group moves more slowly at first, but its gains compound faster.

And that compounding effect is where real advantage lies.

Closing Thought

AI is not here to replace thinking.

It is here to reward those who already think clearly.

When knowledge is explicit, structured, and respected, AI becomes transformative.

When it is not, AI merely makes confusion faster and more convincing.

The future does not belong to those who believe in AI magic.

It belongs to those who understand what is being amplified—and why.
